An Introduction to Organic Neural Networks

By Nicolas Gatien on 2024-04-21

Artificial Intelligence

Artificial Intelligence (AI) has disrupted many industries since the inception of the field. In recent years, deep learning models and Artificial Neural Networks (ANNs), models inspired by how the human brain processes information, have been at the forefront of this disruption.

This inspiration continues to guide the development of AI today. Many leading AI companies, such as Meta AI, OpenAI, character.ai, and Google DeepMind, aim to build artificial general intelligence (AGI), often envisioned as an AI capable of doing any intellectual task to the same degree of competence as an expert human.

As groundbreaking as deep learning models have been, they have their shortcomings.

Learning takes a lot of exposure

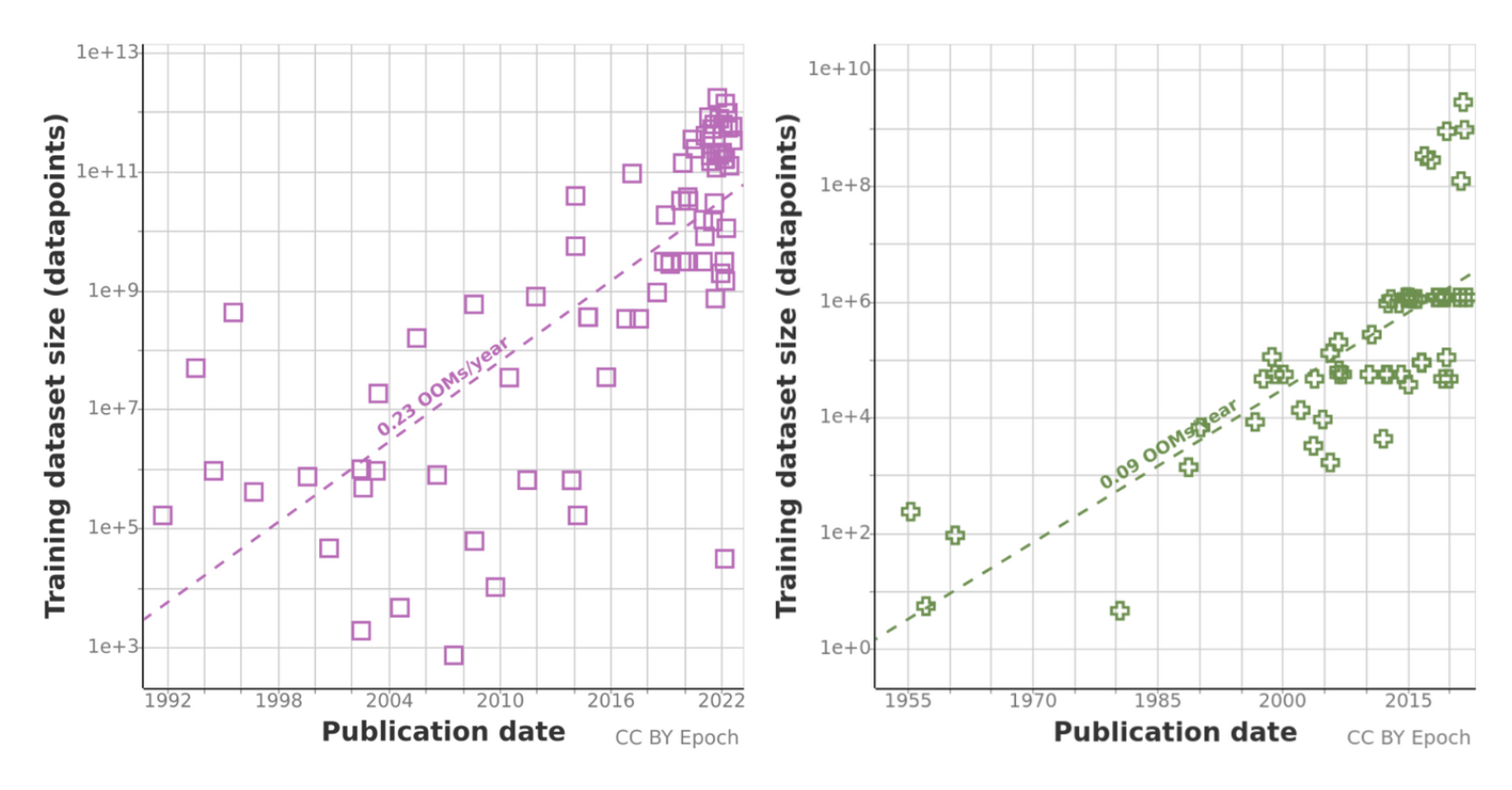

Deep learning models must experience the same data points many times before forming a basic understanding. This is manifested in the huge datasets that are required to teach complex tasks to deep learning models successfully.

The size of these datasets is growing year over year. For example, the left graph shows the size of modern language datasets over time, with recent datasets containing over a trillion data points. The right graph shows the same measure for image datasets, which follow a similar trend of growth. [1]

In the case of language, that is more than the number of words you will speak in your entire life.

Generalization is low

Even after exposure to large quantities of data, the span of generalization remains low. There is some generalization, otherwise the entire premise of deep learning would be pointless, but a model's performance on a task depends greatly on the number of examples of that task in its training dataset.

A recent preprint has shown that even in incredibly large multimodal models, the performance on a "never before seen" task heavily depends on the number of examples of that task in the dataset. Improving performance on zero-shot tasks requires exponentially more data. [2]

Lots of Computational Power

Training a deep learning model takes a lot of computational power. As a result, it consumes a lot of electricity, and training a foundation model becomes unreasonably expensive for any individual.

For example, OpenAI's GPT-3 model cost upwards of $5 million in GPUs to train. [3] Training a model like GPT-3 can take up to 1,300 megawatt-hours (MWh) of electricity, roughly what 130 US homes consume in a year. [4] By another measure, since the average adult brain consumes about 0.3 kilowatt-hours per day, [5] training GPT-3 consumed enough energy to sustain 143 human brains from birth to death (energy consumed per day × days in a year × average life expectancy in Canada).
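The brain comparison can be checked with a few lines of arithmetic. This is only a sketch: the energy figures come from the text, but the article does not state which life expectancy it used, so the 83 years below is an assumption chosen because it reproduces the quoted result. The function name `brain_lifetimes` is likewise just illustrative.

```python
# Figures quoted in the article.
TRAINING_ENERGY_MWH = 1_300   # upper estimate for training GPT-3 [4]
BRAIN_KWH_PER_DAY = 0.3       # average adult brain [5]
DAYS_PER_YEAR = 365

# ASSUMPTION: the article does not give the life expectancy it used.
# 83 years is a guess that reproduces the quoted figure of 143.
LIFE_EXPECTANCY_YEARS = 83


def brain_lifetimes(training_mwh, kwh_per_day, years):
    """How many brain lifetimes the training energy could sustain."""
    lifetime_kwh = kwh_per_day * DAYS_PER_YEAR * years
    return training_mwh * 1_000 / lifetime_kwh  # convert MWh to kWh


lifetimes = brain_lifetimes(
    TRAINING_ENERGY_MWH, BRAIN_KWH_PER_DAY, LIFE_EXPECTANCY_YEARS
)
print(round(lifetimes))  # 143
```

A slightly different life expectancy shifts the result by a brain or two, which does not change the point of the comparison.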

Organic Neural Networks

Organic Neural Networks (ONNs) are fabricated networks of organic neurons. So far, scientists have been able to teach ONNs to fly simulated aircraft, [6] control simple robots, [7] and play Pong. [8] Though this research is still in its early stages, I have found it fascinating.

General Overview

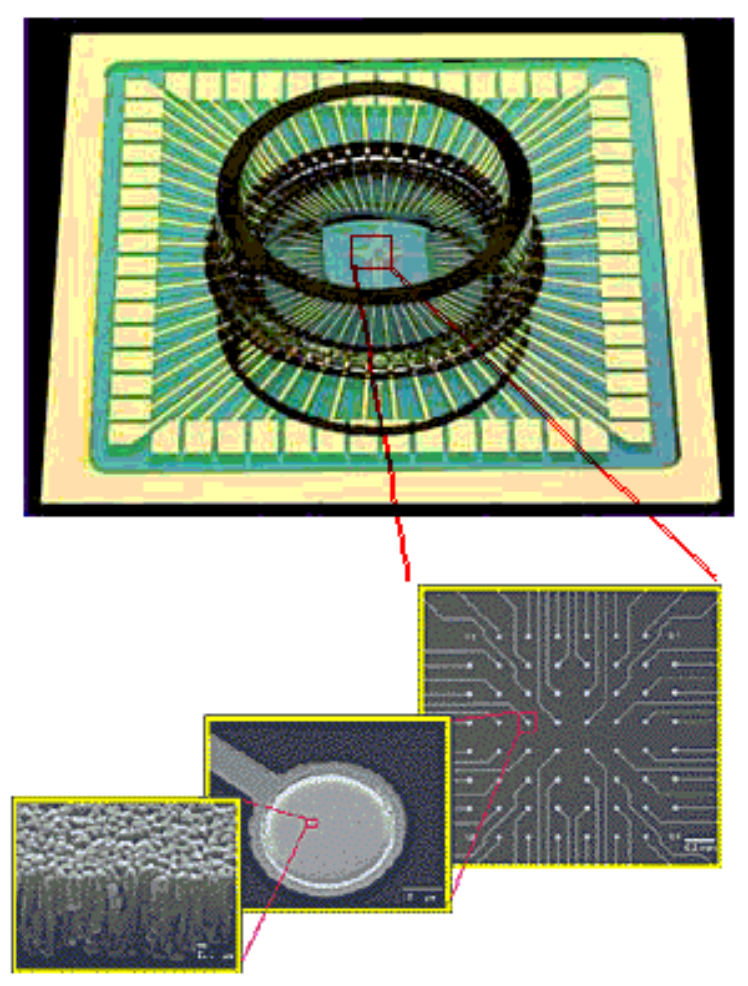

The current approach is to grow a network of neurons (a brain) on a flat sheet of plastic that is connected to a multi-electrode array. Since neurons communicate with electrical pulses, some areas of the multi-electrode array are dedicated to sending signals, and others to receiving them. [6][7]

How to Grow a Brain

Neurons are the main type of computational cell within your brain. Unlike many other cells, neurons don't undergo mitosis, so they are unable to grow in number. Growing a brain is therefore not about growing the cells within the brain, but about creating the connections and pathways between those cells.

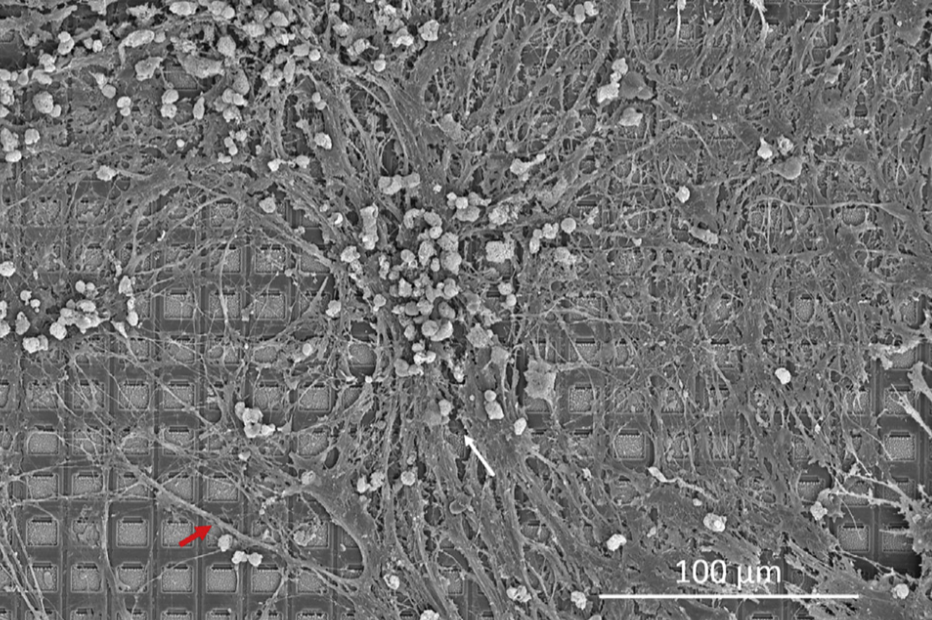

Connections cannot form without neurons. These cells are collected from the cerebral cortex of embryos of rodents such as rats or mice. [6][8] In newer projects, a type of engineered stem cell is grown into neurons instead. Along with the neurons, the necessary glial cells are also collected and placed in a tissue culture dish. [6] This is a glass or plastic container that offers an environment for cells to grow.

Once inside the containers, the cells are supplemented with culture media. Culture media can be thought of as food for cells: it's a liquid or gel that contains nutrients for cells to ingest. Neurons consume these nutrients and then spread their dendrites, looking for other neurons to connect with. The culture media is swapped about once per week to keep the cells healthy. [12]

After 3-5 days, the neurons become active, and after 10 days the networks themselves begin to experience periodic short bursts of activity. [6] These short bursts of activity continue throughout the network's entire lifespan.

What is a Multi-Electrode Array

To understand the multi-electrode array (MEA), you must first understand the electrode. An electrode is a conductor, meaning it can transfer electricity. You can think of it as a bridge. The electrode does not generate electricity, and it does not record electricity; it simply transfers electricity in and out.

An MEA is a group of electrodes used to act as a large bridge between the neurons and external signals.

How to Attach the Brain to the Multi-Electrode Array

To act as a proper bridge, the MEA must be connected on both sides. The brains that have been grown are *in vitro*, meaning they are outside of an organism. In fact, the brains are grown in a little container in which one of the sides is the MEA itself. The neurons attach directly to the electrodes, and their signals can then be recorded.

Stimulation and Recording

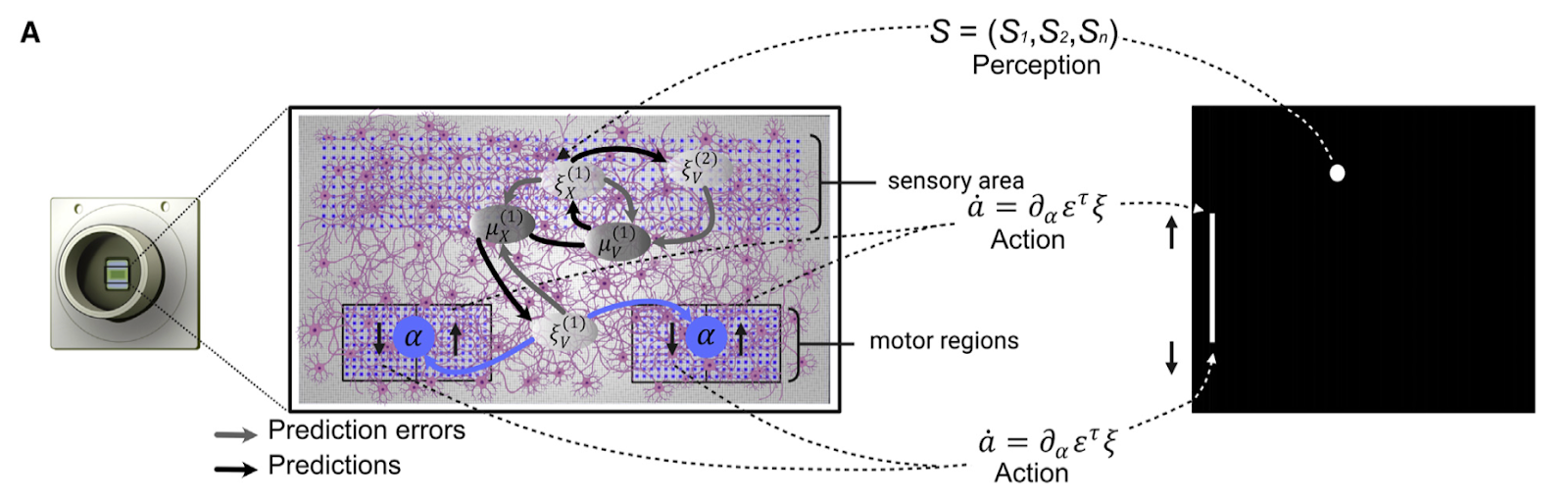

This sheet of electrodes can then transfer the electrical signals from the neural activity to a computer. In the Pong-playing ONN named *DishBrain*, the researchers separated the MEA into three sections.

One section was used to send data from the Pong game to the ONN, and the other two sections were used to record data from *DishBrain* to determine how to move the paddle.

The top area of the MEA was used for inputs, and the two smaller areas were used as motor regions for the paddle. [8]
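As a rough illustration of this three-section layout, the sketch below maps a grid of electrodes to one sensory region and two motor regions, then picks a paddle direction by comparing activity in the two motor regions. The 8×8 grid size, the exact region boundaries, and the "more active region wins" rule are all assumptions made for the example; the text only says that the top area takes inputs and the two smaller areas act as motor regions.

```python
# ASSUMPTION: an 8x8 electrode grid with these region boundaries.
# The real DishBrain layout and decoding rule may differ.
ROWS, COLS = 8, 8
SENSORY_ROWS = range(0, 5)     # top area: receives game input
MOTOR_ROWS = range(5, 8)       # bottom area: split into two motor regions
MOTOR_UP_COLS = range(0, 4)    # hypothetical "move up" region
MOTOR_DOWN_COLS = range(4, 8)  # hypothetical "move down" region


def region_activity(spike_counts, rows, cols):
    """Total number of spikes recorded in a rectangular region."""
    return sum(spike_counts[r][c] for r in rows for c in cols)


def paddle_direction(spike_counts):
    """Move the paddle toward whichever motor region was more active."""
    up = region_activity(spike_counts, MOTOR_ROWS, MOTOR_UP_COLS)
    down = region_activity(spike_counts, MOTOR_ROWS, MOTOR_DOWN_COLS)
    if up > down:
        return "up"
    if down > up:
        return "down"
    return "stay"


# Usage: 5 spikes in the "up" region, 2 in the "down" region.
counts = [[0] * COLS for _ in range(ROWS)]
counts[6][1] = 5
counts[6][6] = 2
print(paddle_direction(counts))  # up
```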

Though the neurons become spontaneously active after a few days in the dish, they are not arranged to complete any objective when first connected to a simulated environment. To train an ONN, the neurons themselves cannot be tuned. Instead, the predictability of the inputs is adjusted based on the network's actions.

Similarly to the process of training an ANN, ONNs have a cost function. ONNs are constantly attempting to predict the sensory data they will receive. [9] To make the real data match their predictions, they can either tune their "beliefs" about the world, or they can take actions to move the world closer to their "beliefs". This cost function is built into the network and is not something that can currently be accessed.

Instead, to train a network, it is rewarded with predictable sensory inputs when it takes a "correct" action, and punished with unpredictable sensory inputs when it takes an "incorrect" action. In the case of Pong, whenever the paddle hits the ball and sends it back to the other side, the network is rewarded with a sequence of evenly spaced pulses. If the ball instead hits the back wall past the paddle, the network receives random noise, then the ball resets and goes in a random direction. [8]

Since the network is trying to minimize the cost function, it will aim to receive more predictable inputs.
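The reward and punishment scheme described above can be sketched as a function that returns pulse timings. Everything specific here is an assumption for illustration: the function name `feedback_stimulus`, the 100 ms window, and the 10 pulses are not taken from the DishBrain paper. Only the idea (evenly spaced pulses after a hit, random noise after a miss) comes from the text.

```python
import random


def feedback_stimulus(hit, duration_ms=100.0, n_pulses=10, rng=None):
    """Return pulse times (in ms) to deliver after a rally outcome.

    ASSUMPTION: the window length and pulse count are made up.
    """
    if rng is None:
        rng = random.Random()
    if hit:
        # Reward: a predictable, evenly spaced pulse train.
        step = duration_ms / n_pulses
        return [i * step for i in range(n_pulses)]
    # Punishment: unpredictable pulses at random times in the window.
    return sorted(rng.uniform(0.0, duration_ms) for _ in range(n_pulses))


print(feedback_stimulus(hit=True))   # evenly spaced: 0.0, 10.0, 20.0, ...
print(feedback_stimulus(hit=False))  # different random times on every call
```

Because the network tries to minimize surprise, the evenly spaced train is the "cheaper" input to receive, which is what nudges it toward hitting the ball.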

From this Pong experiment, scientists were able to determine that ONNs can gather more information and form more concrete patterns from less experience than ANNs. It takes *DishBrain* 10-15 Pong rallies to match an ANN that has played 5000 rallies. [10][11] Most articles reporting on this state that the ONN learns faster, but it's worth noting that the ANN might be able to play all 5000 rallies before *DishBrain* can complete its 10-15. Also, the ONN seems to hit a wall sooner than our current ANN architectures: even though it can learn from fewer examples, it can still be beaten by a fully trained ANN.

Implications for AGI

The authors of the *DishBrain* paper suggest that generalized organic intelligence may be reached before AGI. [8] If this were to happen, it may actually be safer than digital intelligence, as it would still need to deal with the confines of biology. It would not be able to easily duplicate itself, it would not be able to share memory very easily, and it would not be able to recursively self-upgrade at an exponential rate.

An organic general intelligence may lead to a slower takeoff which may be easier to control and possibly even align.

Future Work

At the time of this writing, a few preprints build on the research done with *DishBrain* and the plane-flying ONN.

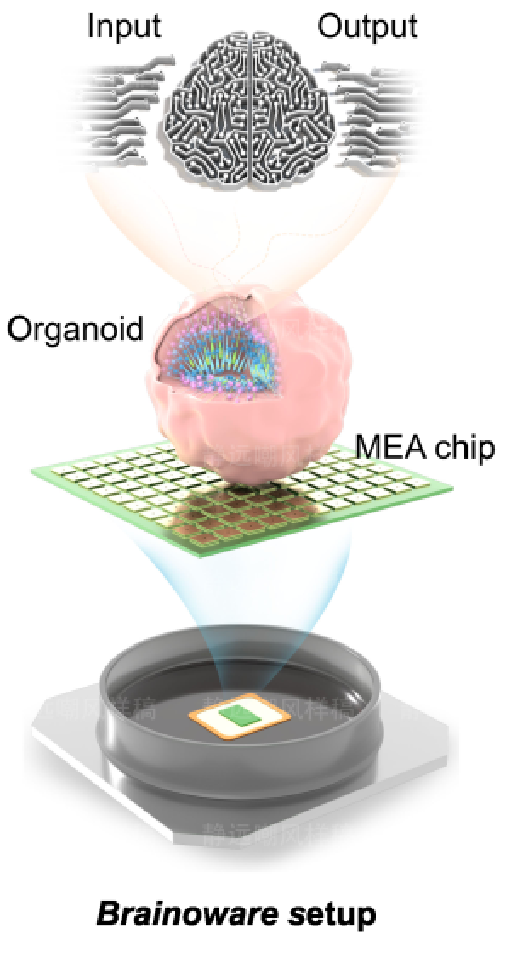

The main difference is the use of cerebral organoids. The ONNs I have written about are flat networks that span across a sheet of electrodes.

A cerebral organoid is a collection of brain cells that have been grown into a 3D structure. A very similar framework is used to get inputs into and outputs out of the cerebral organoid, but instead of being a flat network grown across the electrodes, the organoid is placed on top of the sheet of electrodes. [13]

The use of a 3D structure allows for more complex computation and has allowed *Brainoware* (the name of this cerebral organoid system) to predict the path of a non-linear chaotic function. [13]

This is important because it demonstrates a certain amount of memory. Predicting this function requires knowledge of the previous data points, meaning the 3D structure is able to keep information in a kind of memory to perform a task.
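To make the memory requirement concrete, here is a small sketch using the Hénon map as a stand-in chaotic function. The article does not name the function *Brainoware* predicted, so the choice of map, the parameters `a = 1.4` and `b = 0.3`, and the function name `henon_series` are assumptions for illustration. The point is that the next value depends on the two previous values, so a predictor that only sees the current value is missing information.

```python
def henon_series(n_steps, a=1.4, b=0.3, x0=0.0, y0=0.0):
    """Return the x-values of the Hénon map, starting from (x0, y0)."""
    xs = [x0]
    x, y = x0, y0
    for _ in range(n_steps):
        # Both updates use the old x: y carries b * (previous x) forward.
        x, y = 1 - a * x * x + y, b * x
        xs.append(x)
    return xs


series = henon_series(20)

# The next value is fully determined by the TWO previous values:
#   x[n+1] = 1 - a * x[n]**2 + b * x[n-1]
n = 10
predicted = 1 - 1.4 * series[n] ** 2 + 0.3 * series[n - 1]
print(abs(predicted - series[n + 1]) < 1e-9)  # True
```

Dropping the `series[n - 1]` term breaks the prediction, which is the sense in which the task requires the system to hold on to earlier data points.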

Conclusion

Organic Neural Networks (ONNs) use real brain cells placed on a flat sheet of electrodes, called a Multi-Electrode Array (MEA), to perform tasks. These networks optimize for predictable inputs, meaning they can be rewarded with recognizable patterns and punished with random noise. This is a new technology with plenty of unknowns that still need exploring.

References

Key: 🔬 Academic Article, 📺 YouTube Video, 📖 Book, 📰 Online Article

[1]: 📰Trends in Training Dataset Sizes

[2]: 🔬No “Zero-Shot” Without Exponential Data: Pretraining Concept Frequency Determines Multimodal Model Performance

[3]: 📰What Large Models Cost You – There Is No Free AI Lunch

[4]: 📰How much electricity does AI consume?

[5]: 📰How much energy do we expend using our brains?

[6]: 🔬Adaptive Flight Control With Living Neuronal Networks on Microelectrode Arrays

[7]: 📺Robot controlled by a Rat Brain

[8]: 🔬In vitro neurons learn and exhibit sentience when embodied in a simulated game-world

[9]: 📖How Emotions Are Made - Lisa Feldman Barrett

[10]: 📰Human brain cells in a dish learn to play Pong faster than an AI

[11]: 📰Scientists taught a petri dish of brain cells to play pong faster than an AI

[12]: 📺Rat Neurons Grown On A Computer Chip Fly A Simulated Aircraft

[13]: 🔬Brain Organoid Computing for Artificial Intelligence